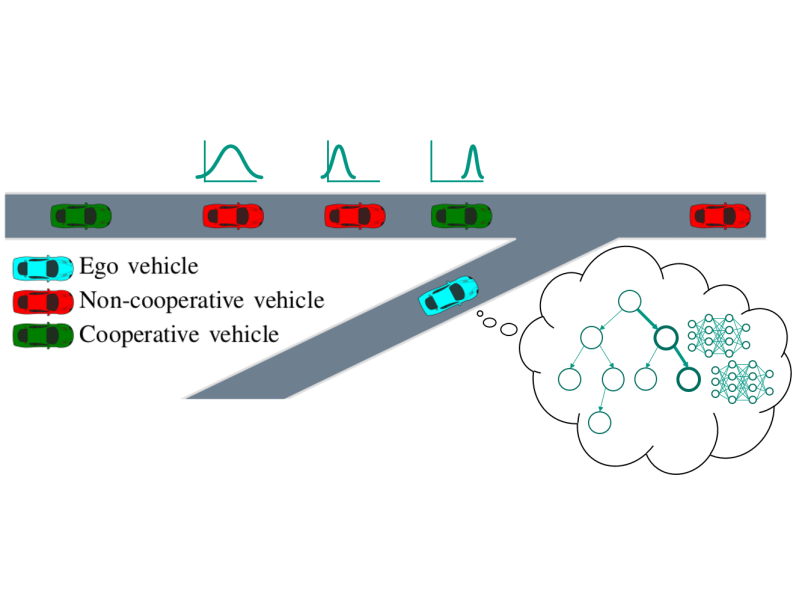

In real traffic, decisions must be made under partial information. In particular, the intentions of other traffic participants, which cannot be observed directly, are important for planning. A principled framework for modelling such problems is the partially observable Markov decision process (POMDP), in which stochastic planning problems can, in principle, be solved optimally. POMDPs can be solved with deep reinforcement learning or with Monte Carlo Tree Search (MCTS). We explore methods that combine both approaches to obtain the benefits of each: a learned neural network policy guides MCTS towards the promising branches of the search tree, and a learned value network evaluates the leaf nodes of the tree.
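The combination described above can be illustrated with a minimal sketch. It assumes the PUCT selection rule popularized by AlphaGo/AlphaZero, which is not necessarily the method used in this project. In that rule, a policy prior `P(s, a)` biases which branches are explored, and a value estimate replaces random rollouts at the leaves. To keep the example short, it uses a hypothetical, fully observable toy problem (a 1-D corridor) and hand-written stand-ins `policy_net` and `value_net` in place of trained networks. In an actual POMDP, tree nodes would instead represent action-observation histories or beliefs (as in POMCP), and the networks would take that history or belief as input.

```python
import math
from dataclasses import dataclass, field

# Hypothetical toy problem (not from the source): an agent on cells 0..GOAL
# moves left (-1) or right (+1); entering GOAL yields reward 1 and ends the episode.
GOAL = 5
ACTIONS = (-1, +1)
GAMMA = 0.95


def step(state, action):
    """Deterministic toy transition: returns (next_state, reward, done)."""
    nxt = min(max(state + action, 0), GOAL)
    done = nxt == GOAL
    return nxt, (1.0 if done else 0.0), done


def policy_net(state):
    """Hypothetical stand-in for a learned policy network: prior over ACTIONS."""
    return dict(zip(ACTIONS, (0.3, 0.7)))


def value_net(state):
    """Hypothetical stand-in for a learned value network (discounted return estimate)."""
    return GAMMA ** (GOAL - state - 1) if state < GOAL else 0.0


@dataclass
class Node:
    state: int
    prior: float                 # P(s, a) from the policy network
    terminal: bool = False
    reward: float = 0.0          # reward received on the edge into this node
    visits: int = 0
    value_sum: float = 0.0       # sum of returns from this node's state onward
    children: dict = field(default_factory=dict)

    def value(self):
        return self.value_sum / self.visits if self.visits else 0.0


def puct_score(parent, child, c_puct):
    # Q(s, a): edge reward plus discounted child value (0 for unvisited children).
    q = child.reward + GAMMA * child.value() if child.visits else 0.0
    # Exploration bonus, scaled by the policy prior.
    u = c_puct * child.prior * math.sqrt(parent.visits) / (1 + child.visits)
    return q + u


def simulate(root, c_puct):
    node, path = root, [root]
    # Selection: follow the highest PUCT score while the node is expanded.
    while node.children:
        parent = node
        node = max(parent.children.values(), key=lambda ch: puct_score(parent, ch, c_puct))
        path.append(node)
    # Expansion and evaluation: the value network replaces a random rollout.
    if node.terminal:
        value = 0.0
    else:
        for action, prior in policy_net(node.state).items():
            nxt, reward, done = step(node.state, action)
            node.children[action] = Node(nxt, prior, terminal=done, reward=reward)
        value = value_net(node.state)
    # Backup: accumulate the discounted return along the path to the root.
    for n in reversed(path):
        n.visits += 1
        n.value_sum += value
        value = n.reward + GAMMA * value


def search(state, num_simulations=100, c_puct=1.5):
    root = Node(state, prior=1.0)
    for _ in range(num_simulations):
        simulate(root, c_puct)
    # Act with the most-visited root action.
    best = max(root.children, key=lambda a: root.children[a].visits)
    return best, root
```

Calling `search(0)` runs 100 simulations from the left end of the corridor and should favour moving right. Evaluating leaves with `value_net` instead of simulating to the end of the episode is what lets a learned value function shorten the search. The policy prior concentrates visits on actions the network already considers promising.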

Contact: M.Sc. Johannes Fischer