Decision-Making and Motion Planning

Group Leader: Dr.-Ing. Ömer Sahin Tas

Motion planning is key to every automated physical system and a well-investigated subject in robotics. For automated vehicles, the task is to determine an appropriate behavior that results in a trajectory, i.e., the state of the vehicle as a function of time. Choosing the behavior is referred to as decision-making, and computing the resulting trajectory as motion planning. The decision is based on previously acquired knowledge about the environment, such as the drivable area and detected objects, as well as on traffic rules.

The key challenges in motion planning for automated driving arise from the risk of collision with other traffic participants. Since human lives are at stake, safety takes top priority. On the other hand, over-cautious behavior, sometimes referred to as “driving like a learner”, is not only inconvenient but can also create dangerous situations: human drivers may misinterpret it or be tempted into risky overtaking maneuvers, for example.

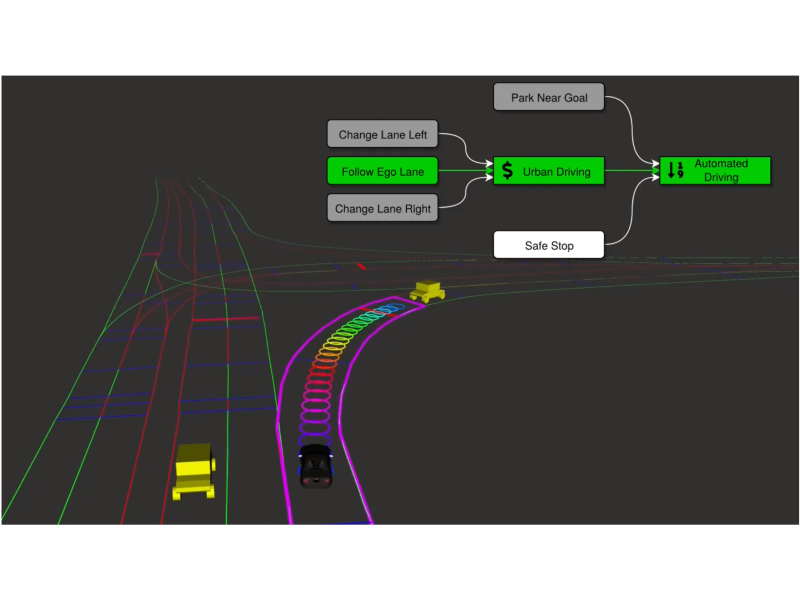

We propose a hierarchical behavior-based architecture for tactical and strategic behavior generation in automated driving. The framework uses modular behavior blocks to compose more complex behaviors in a bottom-up manner.

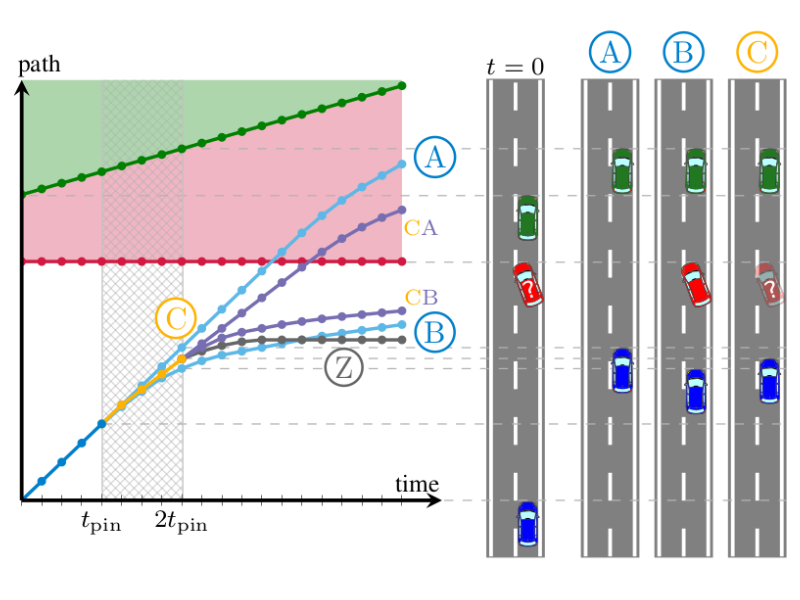

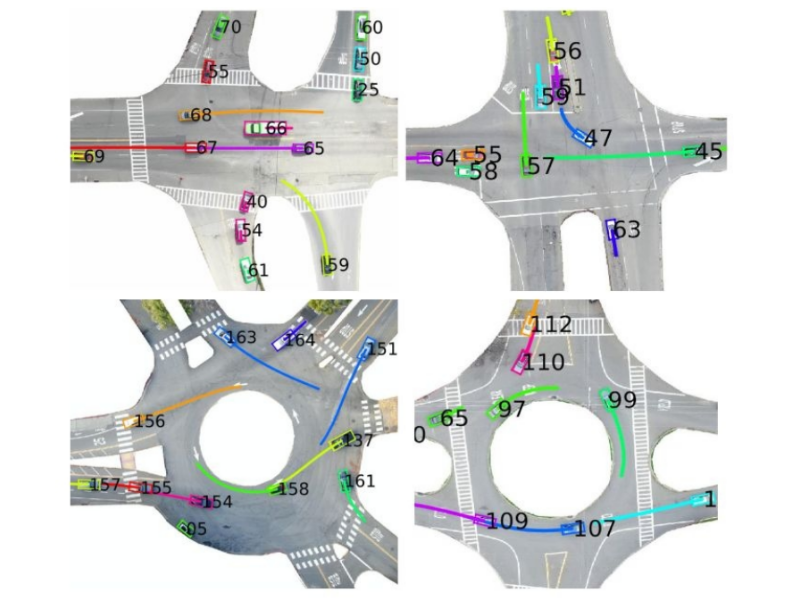

We use numerical optimization to consider multiple maneuvers simultaneously, yielding a motion profile shaped by the probabilities of the individual maneuvers. Our framework inherently postpones maneuver decisions until a later time, particularly when the current uncertainty is high.
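
As a toy illustration of how maneuver probabilities can shape a shared motion profile, the sketch below picks one immediate acceleration by minimizing a probability-weighted quadratic cost over maneuver hypotheses. This is not the group's planner: the function name `shared_acceleration`, the quadratic cost, and the per-maneuver preferred accelerations are assumptions made for illustration only.

```python
def shared_acceleration(maneuvers):
    """Return the acceleration minimizing sum_i p_i * (a - a_i)**2.

    maneuvers: list of (probability, preferred_acceleration) pairs
    (hypothetical inputs). The minimizer of this quadratic cost is the
    probability-weighted mean of the preferred accelerations.
    """
    total_p = sum(p for p, _ in maneuvers)
    if total_p <= 0.0:
        raise ValueError("maneuver probabilities must sum to a positive value")
    return sum(p * a for p, a in maneuvers) / total_p


# High uncertainty: the action stays between "proceed" and "yield".
undecided = shared_acceleration([(0.5, 1.0), (0.5, -2.0)])
# Low uncertainty: the action moves toward the likely maneuver.
confident = shared_acceleration([(0.9, 1.0), (0.1, -2.0)])
```

With equal probabilities the result is a compromise that keeps both maneuvers open; as one maneuver becomes more likely, the shared action moves toward it, which loosely mirrors the postponed decision described above.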

We focus on formal methods and extend existing concepts such as Responsibility-Sensitive Safety (RSS) and set-based methods. Our aim is motion planning and decision-making that is safe yet not over-cautious.
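
For readers unfamiliar with RSS, the sketch below computes the minimum safe longitudinal gap from the published RSS model for two vehicles driving in the same direction. It shows the baseline concept only, not the extensions mentioned above; the function name and all default parameter values are illustrative assumptions.

```python
def rss_min_longitudinal_gap(v_rear, v_front, response_time=1.0,
                             a_max_accel=2.0, b_min_brake=4.0, b_max_brake=8.0):
    """Minimum safe gap [m] between a rear and a front vehicle (RSS).

    Speeds are in m/s, accelerations in m/s^2. The default values are
    assumptions chosen for illustration, not calibrated parameters.
    """
    # Worst case for the rear vehicle: it accelerates during the response
    # time and then brakes only with its minimum guaranteed deceleration.
    v_rear_after = v_rear + response_time * a_max_accel
    d_rear = (v_rear * response_time
              + 0.5 * a_max_accel * response_time ** 2
              + v_rear_after ** 2 / (2.0 * b_min_brake))
    # Worst case for the front vehicle: it brakes as hard as possible.
    d_front = v_front ** 2 / (2.0 * b_max_brake)
    return max(0.0, d_rear - d_front)


gap = rss_min_longitudinal_gap(v_rear=20.0, v_front=20.0)
```

The gap grows quickly with the response time and with the difference between the assumed braking capabilities, which is one reason purely worst-case rules can lead to over-cautious behavior.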

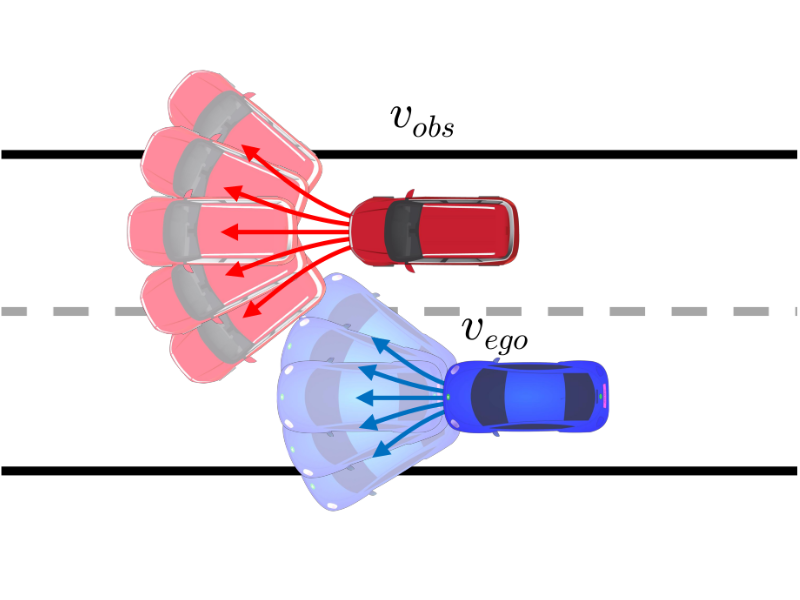

We focus on realistically assessing the collision risk with other traffic participants so that the planning algorithm produces more human-like and safer driving behavior.

We investigate how inverse reinforcement learning can be used to learn a cost function from human driving data.

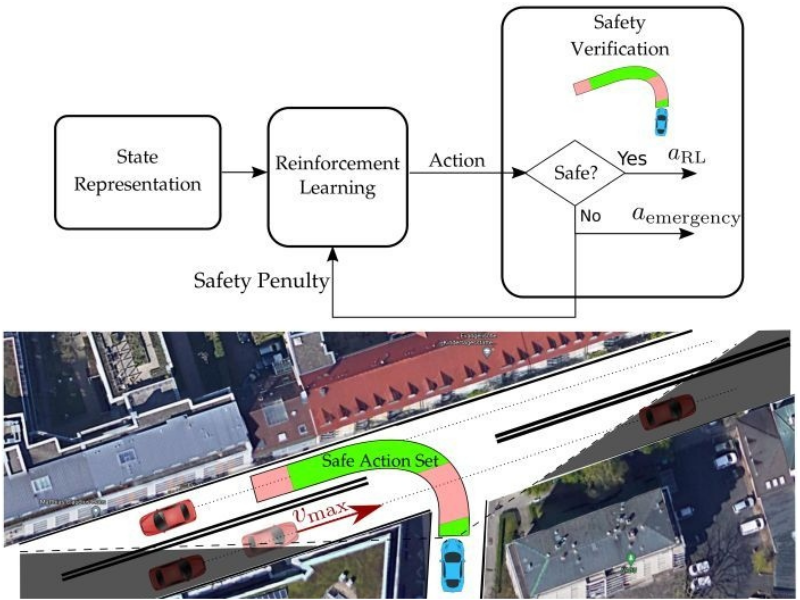

We design safety verification layers that guarantee the safety of actions generated by a reinforcement learning policy and ensure that they are fail-safe.
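
The idea of a safety layer can be sketched in one dimension: the policy's proposed acceleration is accepted only if the vehicle could still stop before a static obstacle afterwards; otherwise a fail-safe braking action replaces it. This is a minimal sketch under strong assumptions (static obstacle, known gap, current state already safe), and the names `safe_action` and `stopping_distance` are hypothetical.

```python
def stopping_distance(speed, max_brake):
    """Distance [m] needed to stop from `speed` with constant deceleration."""
    return speed ** 2 / (2.0 * max_brake)


def safe_action(proposed_accel, speed, gap, dt=0.1, max_brake=6.0):
    """Return the proposed acceleration if it keeps a full stop feasible,
    otherwise the fail-safe action (maximum braking)."""
    # Predict the state one time step ahead under the proposed action.
    next_speed = max(0.0, speed + proposed_accel * dt)
    travelled = 0.5 * (speed + next_speed) * dt
    remaining_gap = gap - travelled
    # Accept only if a full stop is still possible within the remaining gap.
    if stopping_distance(next_speed, max_brake) <= remaining_gap:
        return proposed_accel
    return -max_brake


accepted = safe_action(proposed_accel=1.0, speed=10.0, gap=100.0)
overridden = safe_action(proposed_accel=1.0, speed=10.0, gap=9.0)
```

Because the check only ever replaces an action with braking, the learned policy stays untouched in situations where it is already safe.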

We imitate human driving behavior from recorded driving trajectories and generate high-level decisions. This approach of imitating decisions results in a method that is both interpretable and easy to track.

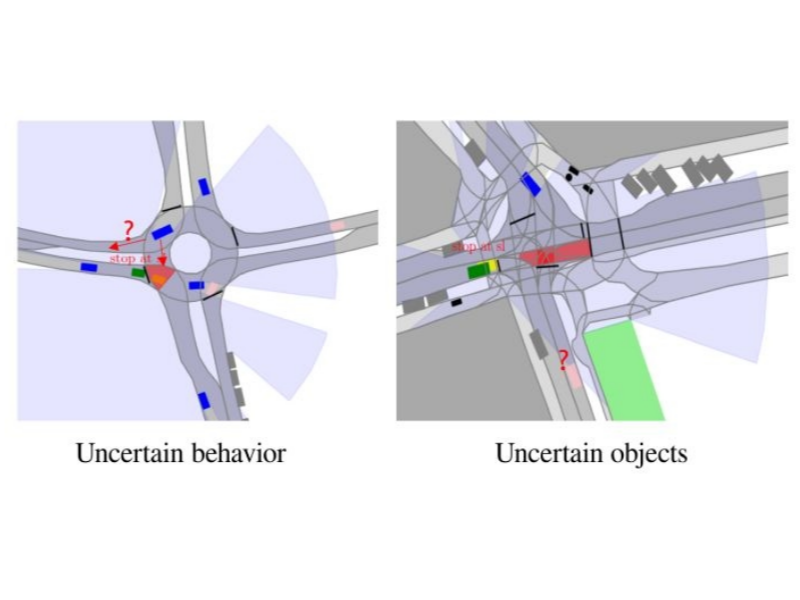

We explore methods that combine Deep Reinforcement Learning and Monte Carlo Tree Search (MCTS) for decision-making under uncertainty. This allows us to guide MCTS toward the promising branches of the search tree while considering how the uncertainty evolves in the future.
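
One common way to let a learned policy guide MCTS is a PUCT-style selection rule, in which the policy's prior probability scales the exploration bonus of each child node. The sketch below shows only this guiding step, not the treatment of uncertainty; whether the group uses this exact rule is an assumption, and the node fields `prior`, `visits`, and `value_sum` are hypothetical.

```python
import math


def puct_select(children, c_puct=1.5):
    """Return the index of the child with the highest PUCT score.

    children: list of dicts with keys 'prior' (policy probability),
    'visits' (visit count) and 'value_sum' (sum of backed-up values).
    Uses sqrt(total_visits + 1) so priors matter before any visit.
    """
    total_visits = sum(ch["visits"] for ch in children)

    def score(ch):
        # Mean value of the child so far (0 if never visited).
        q = ch["value_sum"] / ch["visits"] if ch["visits"] > 0 else 0.0
        # Exploration bonus, scaled by the learned prior.
        u = c_puct * ch["prior"] * math.sqrt(total_visits + 1) / (1 + ch["visits"])
        return q + u

    return max(range(len(children)), key=lambda i: score(children[i]))


fresh = [{"prior": 0.7, "visits": 0, "value_sum": 0.0},
         {"prior": 0.3, "visits": 0, "value_sum": 0.0}]
first_pick = puct_select(fresh)
```

Before any simulation, the child favored by the policy is expanded first; as visit counts grow, the bonus shrinks and the backed-up values dominate the choice.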

We leverage driver models to accelerate policy learning with Deep Reinforcement Learning, using principles from physics-informed deep learning. This allows us to regularize the policy with a driver model, which results in better generalization and higher sample efficiency.
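
A simple way to picture such a regularization is a penalty that pulls the policy's acceleration toward the output of a car-following model. The sketch below uses the Intelligent Driver Model (IDM) as the driver model and a quadratic penalty; the choice of IDM, the penalty form, the weight, and all parameter values are assumptions for illustration, not the group's method.

```python
import math


def idm_acceleration(v, gap, v_lead, v_desired=30.0, time_headway=1.5,
                     min_gap=2.0, a_max=1.0, b_comf=1.5, delta=4.0):
    """Intelligent Driver Model acceleration [m/s^2] (illustrative parameters)."""
    dv = v - v_lead  # approach rate toward the lead vehicle
    # Desired dynamic gap; the max() keeps the dynamic part non-negative.
    s_star = min_gap + max(
        0.0, v * time_headway + v * dv / (2.0 * math.sqrt(a_max * b_comf)))
    return a_max * (1.0 - (v / v_desired) ** delta - (s_star / gap) ** 2)


def regularized_loss(rl_loss, policy_accel, v, gap, v_lead, weight=0.1):
    """RL loss plus a quadratic penalty toward the driver-model action."""
    prior_accel = idm_acceleration(v, gap, v_lead)
    return rl_loss + weight * (policy_accel - prior_accel) ** 2


loss = regularized_loss(rl_loss=1.0, policy_accel=1.0, v=0.0, gap=2.0, v_lead=0.0)
```

The weight trades off how strongly the policy is tied to the driver model against how freely it can deviate where the reward signal suggests a better action.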

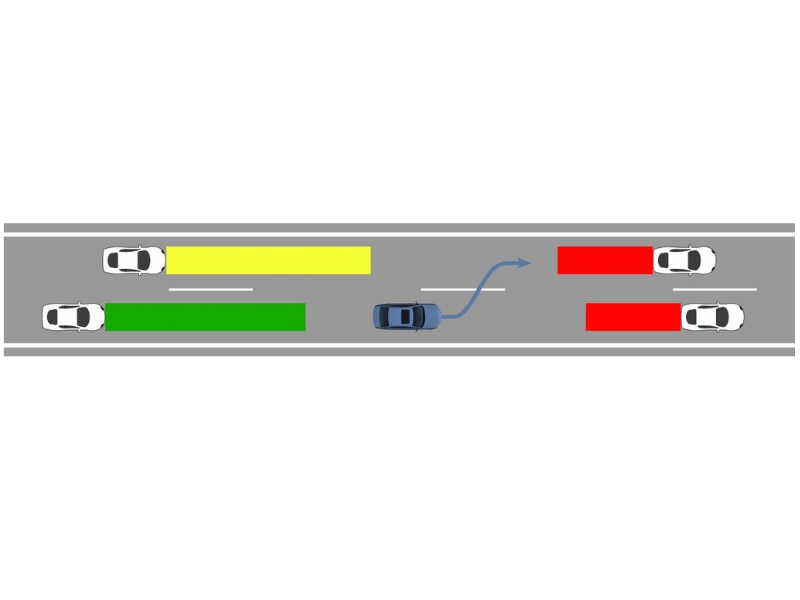

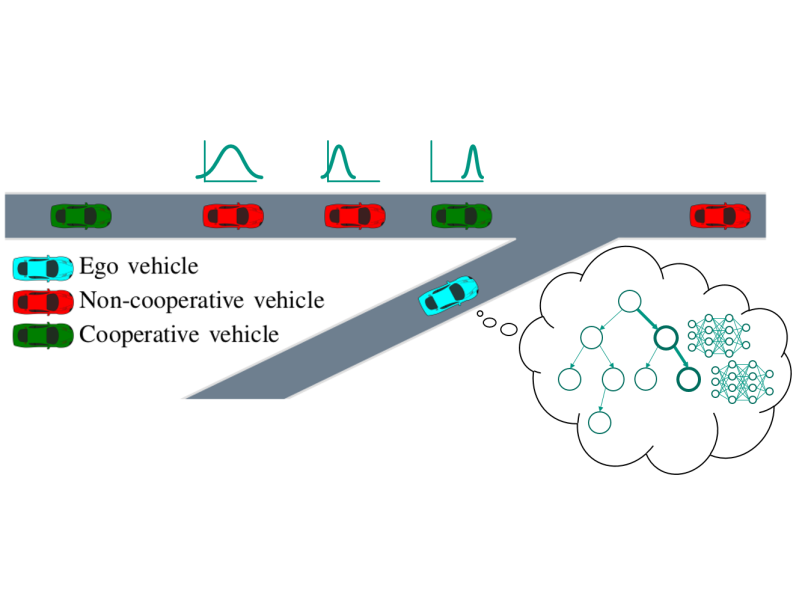

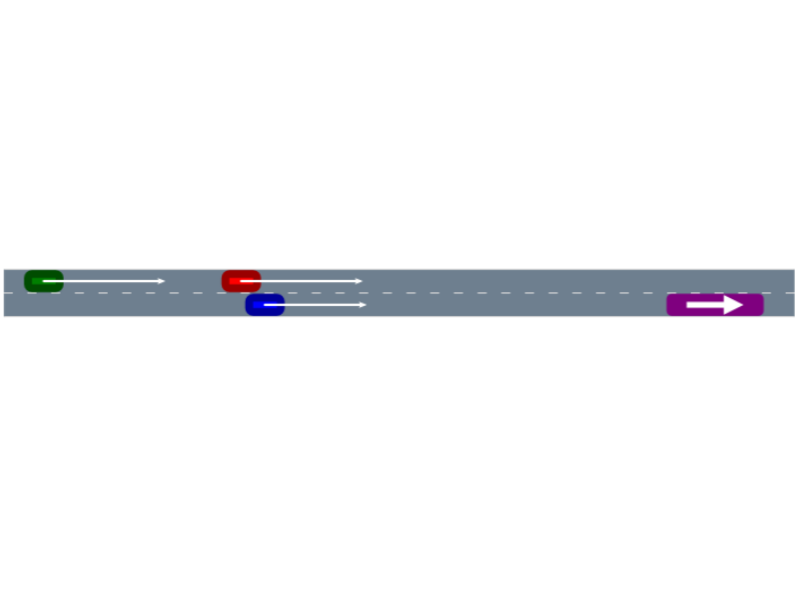

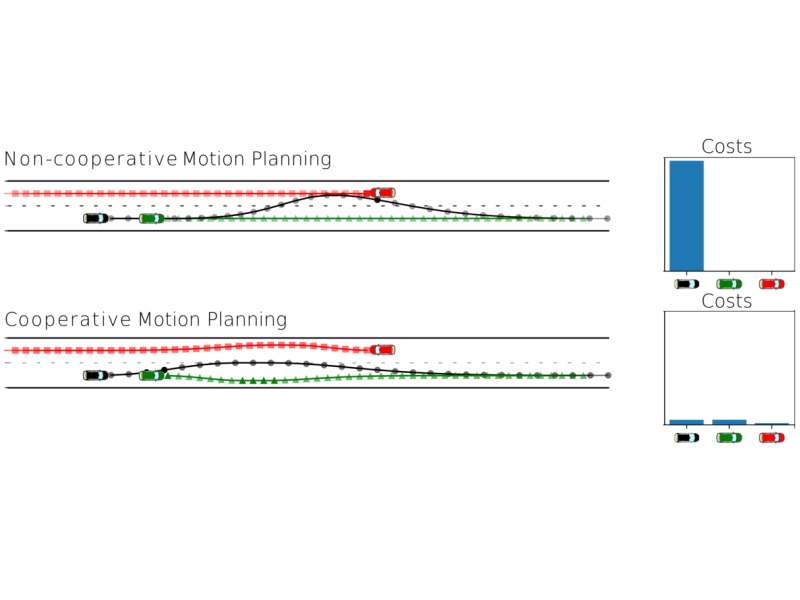

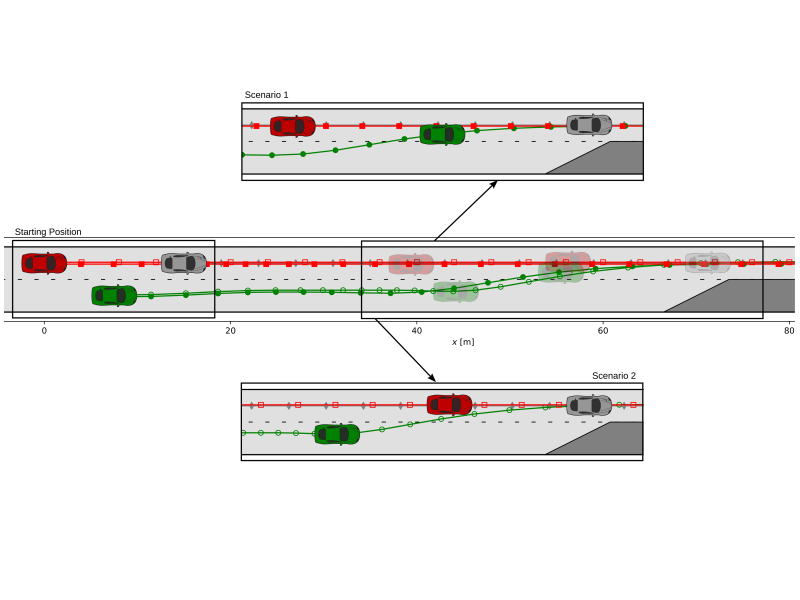

We investigate the potential of multi-vehicle motion planning. Planning the trajectories jointly enables coordinated maneuvers; an overtaking maneuver, for example, can be performed much more efficiently.

We focus on explicitly considering interactions between traffic participants and treat prediction and planning as a joint task. In this way, we create cooperative motion plans.
