Localization and Mapping

Group Leader: Dr.-Ing. Jan-Hendrik Pauls

Automated driving still depends on highly accurate maps that provide enriched, layered knowledge, including lane geometries, traffic rules, and traffic lights. This information cannot always be perceived by the vehicle itself, and the surroundings do not always allow safe detection, for example in bad weather or under occlusion. The research group Mapping & Localization investigates challenges related to map-based automated driving and addresses the future vision of mapless driving.

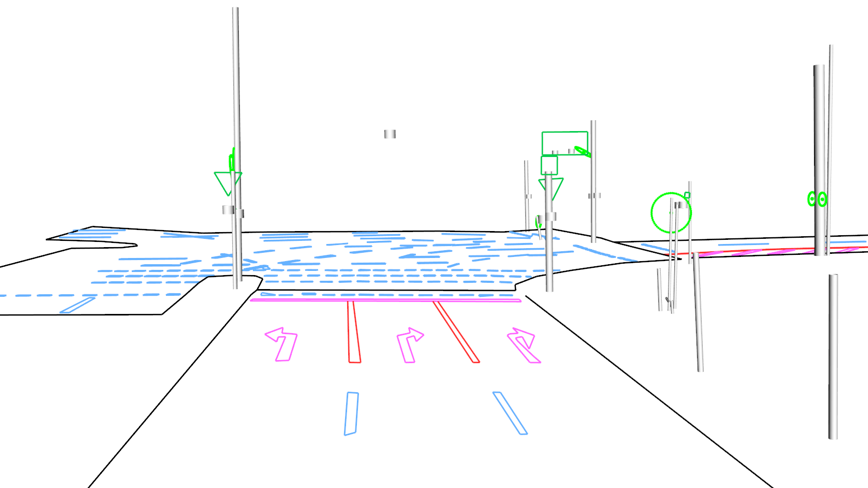

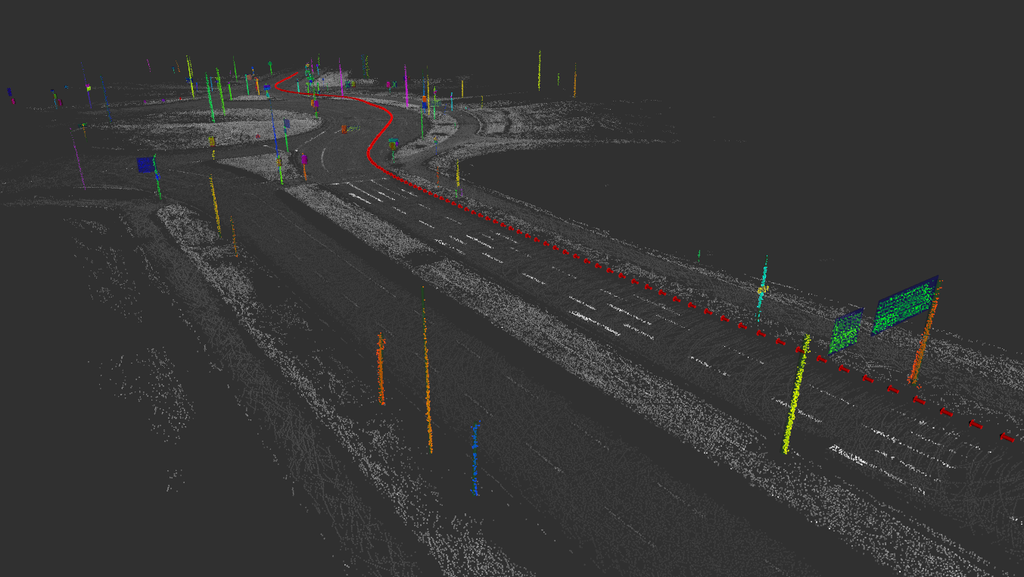

When using highly accurate maps, multiple challenges arise. First, the vehicle's ego-motion needs to be determined (Visual/Lidar/Inertial Odometry) to properly reconstruct the world around it. We create rich and dense 3D representations as well as sparse, abstract, or semantic feature maps that are compact in storage and low in maintenance. These maps not only contain physical elements but also higher-order information, such as lanes, routes, or traffic rules. The process of deriving a suitable representation from sensor data is called Mapping.

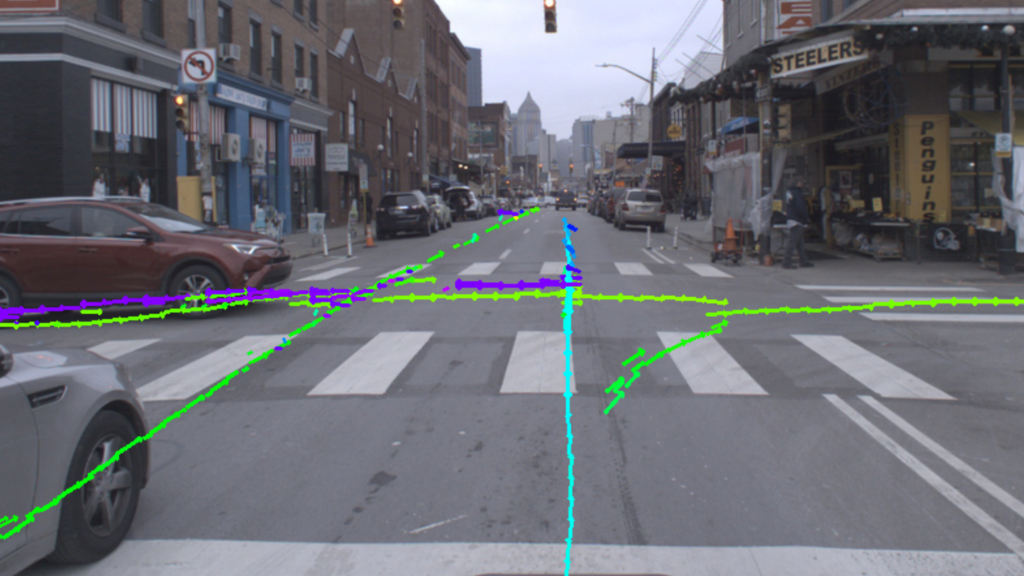

To use a map for automated driving, the automated vehicle has to be localized in the map (Localization). This is achieved by associating static map elements with what the vehicle's sensors currently detect. Since seemingly static map elements can change over time, such changes must be detected so that the vehicle drives only on correct information (Map Verification).
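The association idea can be illustrated with a minimal sketch. It assumes 2D point landmarks and already-known correspondences between map landmarks and detections, which are both strong simplifications. The function name `estimate_pose_2d` is hypothetical and not taken from the group's software. The sketch estimates the vehicle pose as the rigid transform that best aligns the detections, given in the vehicle frame, with their map counterparts in the least-squares sense.

```python
import math


def estimate_pose_2d(map_points, detections):
    """Least-squares rigid alignment of matched 2D landmarks (no scale).

    map_points: landmark positions in the map frame, [(x, y), ...]
    detections: the same landmarks as seen from the vehicle frame,
                in the same order (correspondences are assumed known)
    Returns (x, y, yaw), the vehicle pose in the map frame.
    """
    if len(map_points) != len(detections) or len(map_points) < 2:
        raise ValueError("need at least two matched landmark pairs")
    n = len(map_points)

    # Centroids of both point sets.
    mcx = sum(p[0] for p in map_points) / n
    mcy = sum(p[1] for p in map_points) / n
    dcx = sum(p[0] for p in detections) / n
    dcy = sum(p[1] for p in detections) / n

    # Accumulate dot and cross terms of the centered point pairs.
    s_dot = 0.0
    s_cross = 0.0
    for (mx, my), (dx, dy) in zip(map_points, detections):
        ax, ay = dx - dcx, dy - dcy  # centered detection
        bx, by = mx - mcx, my - mcy  # centered map landmark
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx

    # Rotation that best maps detections onto map landmarks.
    yaw = math.atan2(s_cross, s_dot)
    c, s = math.cos(yaw), math.sin(yaw)

    # Translation that moves the rotated detection centroid onto the map centroid.
    x = mcx - (c * dcx - s * dcy)
    y = mcy - (s * dcx + c * dcy)
    return x, y, yaw
```

In practice the correspondences are not given and have to be found, and outliers caused by changed map elements have to be rejected, which is where the harder part of the problem lies.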

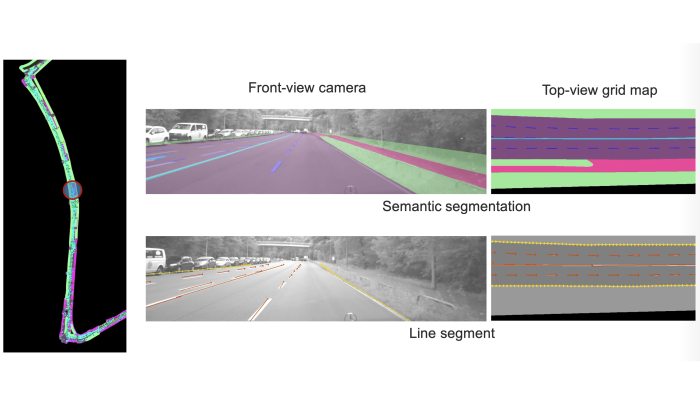

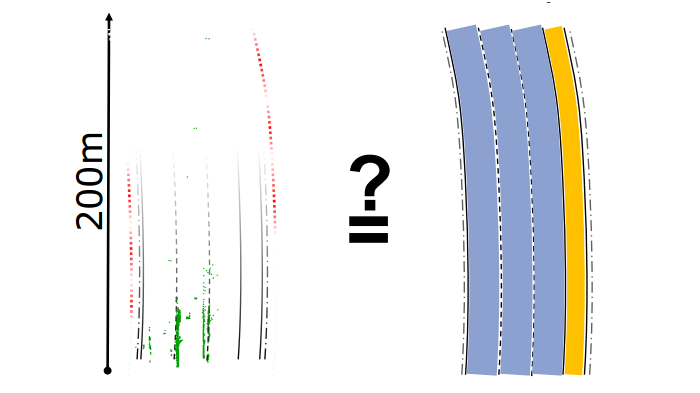

In cases where a map is outdated or the localization is misleading, online Map Perception must serve as a fallback solution. Deep learning-based map perception methods have proven suitable for this task but require large amounts of labeled data. Using accurate localization in verified maps, we can generate scalable ground truth data without additional labeling effort (Map Learning). Furthermore, we learn bird's-eye view representations and aim to leverage the strengths of various sensor types by fusing multimodal data. To update vectorized HD maps, we utilize graph neural networks, as they have proven effective in representing semantic map elements and their relationships.

Scaling automatic data generation for deep learning applications using HD maps and multi-drive mapping.


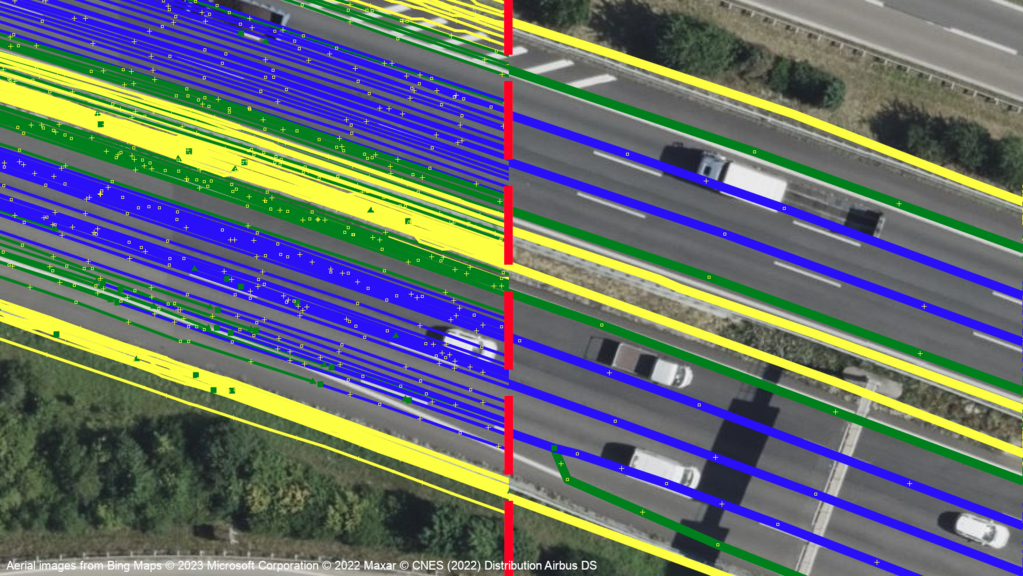

Automated co-referencing, aggregation, and map generation using fleet data from production vehicles.


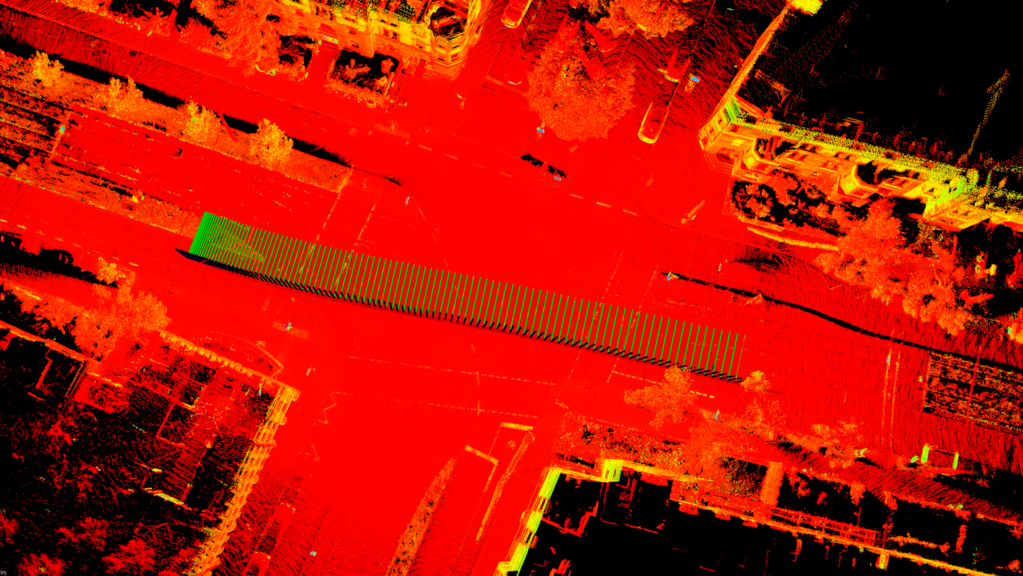

We research a continuous-time-based method that uses onboard sensors to map the environment and localize our vehicle accurately and robustly.


We research methods that enable localization directly in the semantic layers of an HD map. This eliminates the need to store, transfer, and maintain an additional localization layer.


We research a TSDF-based method that accurately reconstructs the scene together with the color and semantic texture of the 3D model.
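For readers unfamiliar with the term, a TSDF (truncated signed distance function) stores, per voxel, the truncated distance to the nearest observed surface and fuses repeated measurements by weighted averaging. The following is a minimal one-dimensional sketch of that general idea along a single sensor ray. It is not the group's method and covers geometry only, not color or semantic texture. The function names, the unit measurement weight, and the truncation distance of 0.3 m are assumptions chosen for illustration.

```python
def integrate_depth(tsdf, weights, voxel_depths, measured_depth, trunc=0.3):
    """Fuse one depth measurement into the TSDF voxels along a single ray.

    tsdf, weights: per-voxel lists, updated in place
    voxel_depths:  distance of each voxel center from the sensor
    trunc:         truncation distance (assumed value, in meters)
    """
    for i, d in enumerate(voxel_depths):
        sdf = measured_depth - d  # positive in front of the surface
        if sdf < -trunc:
            continue  # far behind the surface: not observed by this ray
        value = min(1.0, sdf / trunc)  # truncate and normalize to [-1, 1]
        w = weights[i]
        # Running weighted average with unit weight per measurement.
        tsdf[i] = (tsdf[i] * w + value) / (w + 1.0)
        weights[i] = w + 1.0


def surface_depth(tsdf, weights, voxel_depths):
    """Find the zero crossing by linear interpolation between observed voxels."""
    for i in range(len(tsdf) - 1):
        observed = weights[i] > 0 and weights[i + 1] > 0
        if observed and tsdf[i] > 0 >= tsdf[i + 1]:
            t = tsdf[i] / (tsdf[i] - tsdf[i + 1])
            return voxel_depths[i] + t * (voxel_depths[i + 1] - voxel_depths[i])
    return None  # no surface observed along this ray
```

Averaging over many noisy measurements is what makes the reconstructed surface smoother than any single depth reading; a full 3D system applies the same update to every voxel that a depth image or lidar scan projects onto.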


The automated extraction of a vectorized and parametric HD map yields a compact physical map layer and is the foundation of fully automated HD map generation.


We research methods that use onboard sensors to verify that semantic HD maps are still up to date and can be used safely.


Online perception, from sensor data, of the map information needed for automated driving.


Lanelet2: The open-source, real-world-tested C++/Python HD map framework for automated driving. It is designed to utilize high-definition map data to allow driving in the most complex traffic scenarios.
