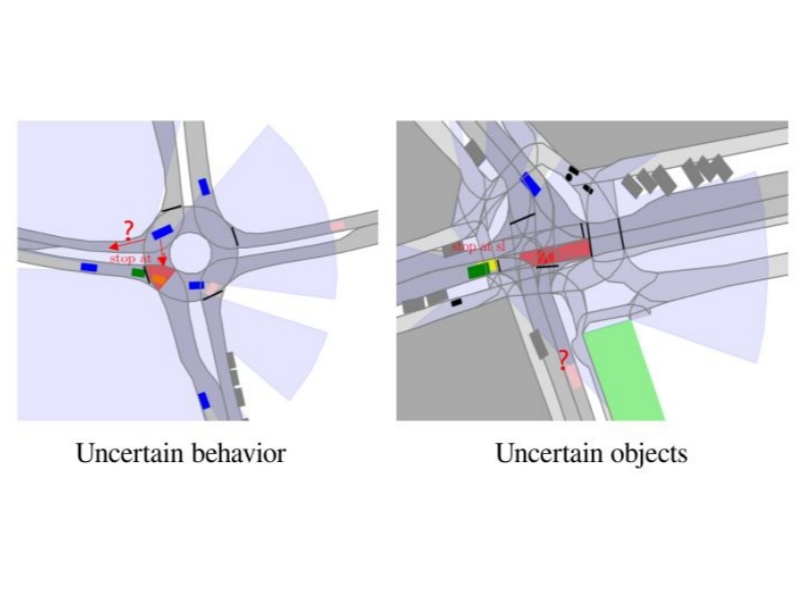

Automated driving systems should behave as much like human drivers as possible, especially in highly interactive scenarios. Human-like behavior is easier for other traffic participants to interpret and predict, which helps prevent misunderstandings and, in the worst case, accidents. We propose to imitate human driving behavior learned from existing human-driven trajectory datasets and to generate high-level decisions for various scenarios, e.g. highway driving and unsignalized intersections. Many other approaches rely on neural networks, either for end-to-end behavior cloning or for approximating Q-functions in reinforcement learning, and their decisions are hard to understand. In contrast, the output decisions of our approach are interpretable and easy to trace. The driving decisions are also provably safe under reasonable assumptions, which we achieve by generalizing the Responsibility-Sensitive Safety (RSS) concept to complex intersections. Simulation evaluations show that our learned policy produces more human-like behavior while achieving a better balance of driving efficiency, comfort, perceived safety, and politeness.
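For readers unfamiliar with RSS, the following is a minimal sketch of the standard RSS minimum safe longitudinal distance for two vehicles driving in the same direction, as commonly stated in the RSS literature. It is not the generalization to intersections described above, and the function name, parameter names, and example values are illustrative assumptions rather than part of this project.

```python
def rss_min_longitudinal_gap(v_rear, v_front, response_time,
                             a_max_accel, a_min_brake, a_max_brake):
    """Standard RSS safe following distance in meters (illustrative sketch).

    Worst case assumed by RSS: the rear vehicle accelerates at a_max_accel
    during its response time and then brakes at only a_min_brake, while the
    front vehicle brakes at a_max_brake. Speeds are in m/s, accelerations
    are positive magnitudes in m/s^2, response_time is in seconds.
    """
    if a_min_brake <= 0 or a_max_brake <= 0:
        raise ValueError("braking decelerations must be positive")

    # Distance the rear vehicle covers while still reacting.
    reaction_dist = v_rear * response_time + 0.5 * a_max_accel * response_time ** 2
    # Rear vehicle speed at the end of the response time.
    v_rear_after = v_rear + response_time * a_max_accel
    # Stopping distance of the rear vehicle under gentle braking.
    rear_stop_dist = v_rear_after ** 2 / (2.0 * a_min_brake)
    # Stopping distance of the front vehicle under hard braking.
    front_stop_dist = v_front ** 2 / (2.0 * a_max_brake)

    # A negative value means no gap is required, so clamp at zero.
    return max(0.0, reaction_dist + rear_stop_dist - front_stop_dist)


# Hypothetical example values: both vehicles at 20 m/s, 1 s response time.
gap = rss_min_longitudinal_gap(20.0, 20.0, 1.0, 2.0, 4.0, 8.0)
print(gap)  # 56.5
```

A decision such as a lane change or an intersection crossing would be considered safe in this simplified setting only if the resulting gap stays above the returned value.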

Contact: M.Sc. Lingguang Wang