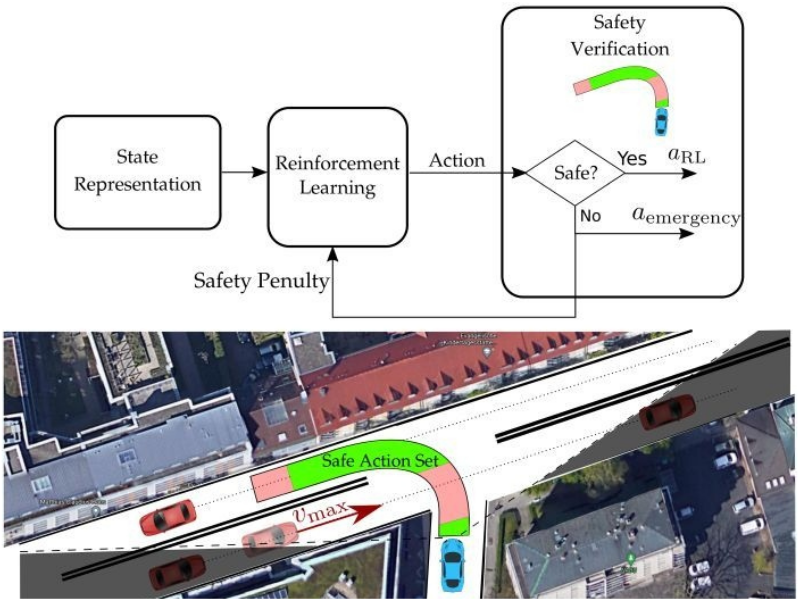

Reinforcement learning (RL) learns policies that assess the current situation and select actions that are optimal in the long term. Applying RL policies in safety-critical domains such as automated driving, where perception is uncertain, is challenging. To address this, we design safety verification layers that guarantee the safety of the actions generated by the RL policy and ensure that they are fail-safe. With an appropriate reward scheme, we aim to learn policies that are always safe and still produce long-term optimal behavior.
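As an illustration of the idea of a safety verification layer, the following minimal Python sketch checks an action proposed by an RL policy before it is executed. It is not our actual implementation: the one-dimensional car-following model, the names `State`, `is_fail_safe`, `safe_action`, and `shaped_reward`, and the constants `MAX_BRAKE` and `DT` are all assumptions made for this example. The check asks whether the vehicle could still stop within the worst-case gap after executing the proposed acceleration for one step. If not, the layer replaces the action with full braking, and the reward is reduced so that the policy can learn to avoid such overrides.

```python
from dataclasses import dataclass

MAX_BRAKE = 6.0  # m/s^2, assumed maximum deceleration (hypothetical value)
DT = 0.5         # s, assumed length of one decision step (hypothetical value)


@dataclass
class State:
    speed: float            # ego speed in m/s
    gap: float              # measured distance to the obstacle ahead in m
    gap_uncertainty: float  # assumed worst-case measurement error in m


def is_fail_safe(state: State, accel: float) -> bool:
    """Return True if full braking after one step still stops within the worst-case gap."""
    v0 = state.speed
    v1 = max(0.0, v0 + accel * DT)  # speed after the step, never negative
    # Trapezoidal distance over the step; this overestimates (is conservative)
    # when the vehicle would come to a stop before the step ends.
    step_distance = 0.5 * (v0 + v1) * DT
    braking_distance = v1 ** 2 / (2.0 * MAX_BRAKE)
    # Perception uncertainty: assume the obstacle is as close as it could be.
    worst_case_gap = state.gap - state.gap_uncertainty
    return step_distance + braking_distance <= worst_case_gap


def safe_action(state: State, proposed_accel: float) -> tuple[float, bool]:
    """Pass the proposed action through if verified, else fall back to full braking."""
    if is_fail_safe(state, proposed_accel):
        return proposed_accel, False
    return -MAX_BRAKE, True  # fallback maneuver, flagged as an override


def shaped_reward(task_reward: float, overridden: bool, penalty: float = 1.0) -> float:
    """Subtract a penalty whenever the safety layer had to override the policy."""
    return task_reward - penalty if overridden else task_reward


if __name__ == "__main__":
    state = State(speed=20.0, gap=40.0, gap_uncertainty=5.0)
    action, overridden = safe_action(state, proposed_accel=2.0)
    print(action, overridden, shaped_reward(0.5, overridden))
```

In this sketch the fallback is only safe if the previous state was itself verified, which is why the check is applied at every step. A realistic system would replace the simple braking model with a verification method suited to the traffic scenario and its perception uncertainties.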

Contact: M.Sc. Johannes Fischer and M.Sc. Marlon Steiner